The new competence of AI literacy for clinicians

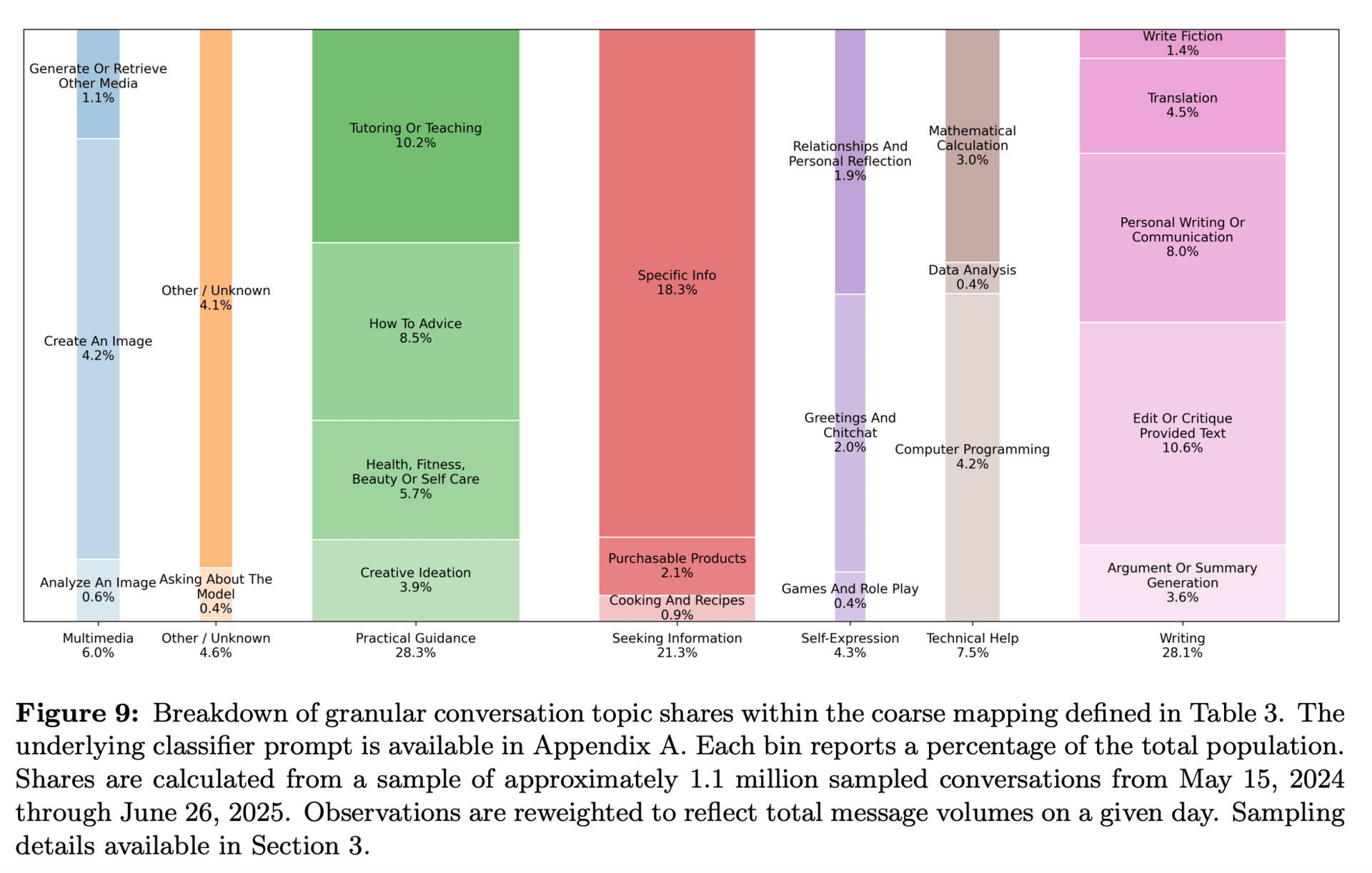

ChatGPT isn’t on the horizon—it’s already here. According to a recent NBER study, the tool now has more than 700 million weekly active users—nearly 10% of the world’s adults—and almost half are under 26. Health, fitness, and self-care are among its most common topics (NBER Study, September 2025).

Roughly 1 in 7 adults over 50 use AI to get health information, according to a recent University of Michigan poll, and 1 in 4 adults under 30 do so, according to a KFF poll.

That means the next generation of patients isn’t arriving with scattered web searches. They’re walking in with full narratives generated by AI—coherent, persuasive, and often carefully reasoned. As one physician put it: “Search returns blue links. ChatGPT delivers personalized accounts.”

Some research has shown that when patients learn their doctor used AI in care, they rate that doctor as less competent, less trustworthy, and less empathic. But let’s be honest: that perception may reflect something important. If a physician is seen as simply echoing what ChatGPT can already do, of course patients will ask—why do I need you?

The opportunity lies in showing the added value. Patients don’t want a ChatGPT proxy. They want a clinician who can critically assess, contextualize, and translate AI’s outputs into safe, personalized care.

From Dr. Google to Dr. ChatGPT

We’ve seen this movie before. Two decades ago, doctors worried about patients “Dr. Googling” symptoms. Over time, the best clinicians leaned in, helping patients separate credible sources from noise and building trust through collaboration.

The same is true now, only the input is more sophisticated. Patients arrive with ChatGPT summaries of labs or supplement “protocols.” Sometimes those answers are right, sometimes dangerously wrong. Either way, patients expect you to be fluent enough to review, reframe, and guide.

Dismiss AI outright, and you risk sounding out of touch. Engage it wisely, and you establish yourself as the safeguard, turning skepticism into confidence.

What AI-literate clinicians do differently

AI literacy is the new professional fluency. Clinicians who thrive in this era do four things well:

Use AI as a co-pilot. Offload synthesis or patient-friendly summaries—but keep your judgment at the center.

Vet outputs critically. Treat AI like a sharp but green resident. Helpful, but never unchecked.

Explain clearly. Patients want to know you’re adding value. Saying “I used an AI tool to scan the latest studies, then validated against guidelines and my expertise” positions you as thorough, not dependent.

Lean harder on empathy. Ironically, the more AI is in the room, the more patients value context, listening, and care.

Why this matters for trust

Yes, studies show consistent negative effects on perceived competence and empathy when clinicians reveal AI use. But those effects are not inevitable. They’re strongest when the doctor doesn’t add anything beyond what the AI provides.

Flip the script, and the opposite is true. In lower-risk situations, or when clinicians show how AI augments their expertise, patients often see it as supportive. Some even believe it levels the playing field for younger clinicians and improves consistency.

The key is communication: frame AI as augmentation, not replacement. The winning posture sounds like this: “AI helps surface possibilities faster. I always verify, and together we’ll decide what makes sense for you.”

The new marker of competence

Using Google became table stakes for modern clinicians two decades ago. Today, AI is the new search bar, and roughly 1 in 4 adults under 30 already use it for health information.

AI literacy doesn’t weaken your authority. It redefines it. Competence in 2025 means knowing how to find, filter, and frame—not just recall—information. Clinicians who can do this while deepening empathy and judgment will set the standard of care.

The bottom line: patients may walk in skeptical. Your response determines whether AI makes you look weaker or stronger. Lead with fluency and humanity, and AI becomes a trust multiplier rather than a liability.

✅ Your Homework

Ask one patient this week: “Did you look anything up online or use AI before coming in?” Review it together. Correct what’s off, affirm what’s useful, and explain your process. It’s a simple move that builds trust while sharpening your own AI literacy.

How I AI with Dr. Hillary Lin

Dr. Hillary Lin isn’t just a Stanford-trained physician—she’s a founder, educator, and one of the leading voices in longevity medicine. As Co-Founder and CEO of Care Core, she partners with clinics nationwide to bring evidence-based longevity protocols and AI-driven workflows to life.

In her own clinic, Hillary uses AI as a research copilot—keeping her on the frontier of longevity science without drowning in PubMed tabs. “Every patient is an N of one,” she says, “and AI helps me quickly synthesize the latest research on interventions, medications, or biomarker combinations—so I can tailor decisions to that individual.”

She cycles between ChatGPT, Claude, Grok, and Gemini depending on the task—whether that’s summarizing recent studies, checking references, or generating images for patient education. OpenEvidence? “Great for guideline-based care,” Hillary notes, “but if you’re practicing frontier longevity medicine, general LLMs—when prompted well—can be even more powerful.”

Her approach is conversational, not transactional. “I don’t think of it as one-shot prompts. It’s an ongoing dialogue. The more you refine, the more value you extract. That’s where clinicians who understand context will always outperform casual users.”

👉 Try this:

Next time you’re evaluating a new intervention, ask your LLM: “Summarize the latest peer-reviewed research on [intervention] with citations. Include outcomes, study size, and limitations.”

Then, refine: “From a longevity perspective, what does this mean for an otherwise healthy 42-year-old woman considering perimenopause HRT?”

Compare outputs from different LLMs, including OpenEvidence.
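If you (or someone on your team) would rather script this than retype it, the two-step prompt and the side-by-side comparison can be sketched in a few lines of Python. This is a minimal sketch, not a real integration: `build_prompts`, `compare_models`, and `stub_model` are hypothetical names invented for illustration, and the stub stands in for whatever client you actually use to reach each LLM.

```python
from typing import Callable, Dict, List

# Prompt wording taken from the "Try this" steps above.
SUMMARY_PROMPT = (
    "Summarize the latest peer-reviewed research on {intervention} with citations. "
    "Include outcomes, study size, and limitations."
)
REFINE_PROMPT = "From a longevity perspective, what does this mean for {patient_context}?"


def build_prompts(intervention: str, patient_context: str) -> List[str]:
    """Return the summary prompt followed by the refinement prompt."""
    return [
        SUMMARY_PROMPT.format(intervention=intervention),
        REFINE_PROMPT.format(patient_context=patient_context),
    ]


def compare_models(
    models: Dict[str, Callable[[List[str]], str]], prompts: List[str]
) -> Dict[str, str]:
    """Send the same prompt sequence to every model and collect answers by name.

    Each value in `models` is a hypothetical callable that takes the prompts
    and returns the model's final answer as text.
    """
    return {name: ask(prompts) for name, ask in models.items()}


def stub_model(prompts: List[str]) -> str:
    """Placeholder for a real LLM call (assumption: swap in your own client)."""
    return f"[stub answer after {len(prompts)} prompts]"


if __name__ == "__main__":
    prompts = build_prompts(
        "perimenopause HRT",
        "an otherwise healthy 42-year-old woman considering perimenopause HRT",
    )
    answers = compare_models({"model_a": stub_model, "model_b": stub_model}, prompts)
    for name, answer in answers.items():
        print(f"{name}: {answer}")
```

Keeping the prompts as templates means every model gets exactly the same wording, so differences in the answers reflect the models rather than how the question was typed.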

✅ Your Homework

Pick one complex patient case this week. Have your LLM synthesize the evidence base. Spend 10 minutes verifying citations—then use your expertise to shape the decision.

AI isn’t replacing your judgment. It’s clearing the noise so you can personalize care with more speed and confidence.
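For the 10-minute citation check in the homework, one way to get started is to pull every DOI and PMID out of the LLM's answer into a checklist you can tick off as you look each one up. The sketch below is an assumption-laden helper, not a validated tool: `citation_checklist` is a hypothetical name, the patterns only catch identifiers written in the common `10.xxxx/...` and `PMID 12345678` styles, and the sample text is invented. It does not confirm that a reference exists or says what the model claims; that part is still yours.

```python
import re
from typing import List

# Assumed patterns: a DOI starts with "10.", a 4-9 digit prefix, then "/".
DOI_RE = re.compile(r"\b10\.\d{4,9}/[^\s\"<>]+")
# PMIDs written as "PMID 123" or "PMID: 123" (case-insensitive).
PMID_RE = re.compile(r"\bPMID:?\s*(\d{1,8})\b", re.IGNORECASE)


def citation_checklist(answer: str) -> List[str]:
    """Return one unchecked checklist line per unique DOI or PMID in the text."""
    items = []
    for doi in DOI_RE.findall(answer):
        # Trim sentence punctuation that often trails a DOI in running text.
        items.append(f"[ ] DOI {doi.rstrip('.,;)')}")
    for pmid in PMID_RE.findall(answer):
        items.append(f"[ ] PMID {pmid}")
    # Drop duplicates while keeping first-seen order.
    return list(dict.fromkeys(items))


if __name__ == "__main__":
    # Invented sample answer, for illustration only.
    sample = (
        "One trial reported improved outcomes (doi: 10.1000/xyz123). "
        "See also PMID: 12345678 and PMID 12345678."
    )
    for line in citation_checklist(sample):
        print(line)
```

An answer that yields an empty checklist is itself a signal: the model gave you no identifiers to verify, so ask it for them before trusting the summary.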

👉 Want to go deeper?

Looking for a way to have this capability inside your EHR, without copying and pasting context about your patients? Book a call with our team at Vibrant to learn if our platform might be a great fit for your practice.

This Week in Clinical AI

Some of the most interesting conversations, reads and research related to AI and clinical practice.

Using AI to heal the system, not replace doctors. (AMA Newswire) Jefferson Health president Baligh Yehia, MD, says AI is most powerful when it’s “high tech and high touch.” Two-thirds of physicians now use AI (up from 38% in 2023), with Jefferson deploying it for symptom checkers, ambient scribes, and imaging support—freeing up more time for face-to-face care.

Clinicians judge peers who lean on AI more harshly. A Johns Hopkins study of 276 clinicians found physicians using GenAI as a primary decision-maker were rated far lower in clinical skill and competence than those who didn’t use it. Framing AI as a verification tool softened the penalty, but didn’t erase it—underscoring that how AI is presented matters as much as how it’s used.

Stanford builds a benchmark for AI agents in medicine. Researchers created MedAgentBench, a virtual EHR environment testing whether AI agents can actually perform clinical tasks like retrieving labs or ordering meds. The best model (Claude 3.5 Sonnet v2) hit a 70% success rate—far from perfect, but enough to suggest AI may soon handle basic “housekeeping” in care, easing clinician workload without replacing doctors.

👋 Welcome New Readers

The Modern Clinician is written for functional, integrative, and longevity-focused physicians who want to scale their impact and deliver cutting-edge care.

If you liked this one, share it with a colleague! We appreciate you spreading the word.

To learn more about the why behind this newsletter, start with our first post introducing The Modern Clinician.